About the Post

This blog post is based on my project for the "Distributed Computing" course, which I took in Fall 2011, the final semester of my graduate studies. It was a group project, so I want to credit the group members who worked on it with me. My group mates were:

- Justin Roberts

- Darshan Lathia

Problem

The problem we set out to solve was open-ended: design a distributed architecture that can support a resource discovery system handling millions of resource discovery requests. For example, a user wants to access a particular resource in the cloud, and this resource is available at different locations on the internet. Before using the resource, the user has to locate it; only then can the user use it for a specific task. The purpose of this project was to design a system that allows a user to locate a resource. The system should handle heavy traffic coming from all over the world. It should be scalable and robust enough to handle the workload, and fault tolerant enough to keep serving requests when something fails or crashes.

Introduction

In this paper, we explore the inner workings of our architecture. This architecture is a complex layering of protocols which individually solve various problems, but all work together to serve the common purpose of resource discovery in a massively distributed system. We intend to analyze our algorithms by providing a small example of how they function, and showing that each one is a robust solution for accomplishing its intended task. Finally, we will conclude by explaining our simulation strategy, and how we would accomplish a simulation if we were to fully develop our distributed system concept.

Overview of Architecture

Figure 1 - Overall Architecture

In Figure 1, we present the top-level overview of our regions. Whenever a client makes a request for a hardware or software resource, the request first lands at the DNS (the request is made to one or more domain names). The DNS server has a list of IP addresses corresponding to the domain name specified in the request, and the request is routed to one of those IP addresses (chosen at random or in round-robin fashion). The IP addresses represent routers. On receiving a request, a router forwards it to an appropriate regional server based on the location of the client, as identified by the client's IP address. A client's request is therefore routed to the region closest to the client.

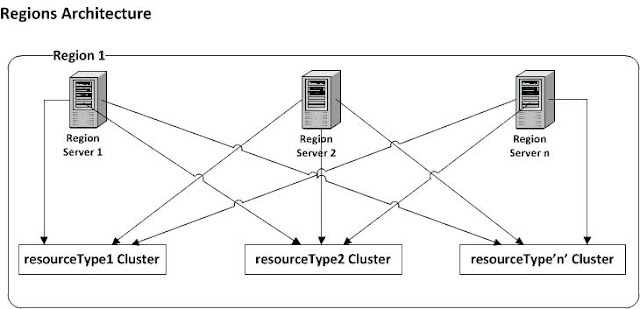

A region consists of regional servers and clusters. The number of regional servers depends on the request load from clients in that particular region (they are auto-scalable). There is one resource cluster for each available resource type (for example, one cluster could be entirely devoted to Microsoft resources). The regional server reads the Resource Type in a client request and forwards the request to one of the cluster servers belonging to that Resource Type cluster, again in round-robin fashion. All the regional servers of a region are connected to every cluster in that region, so if one regional server fails, the system continues to function through the redundant links.
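The round-robin selection with failover described above can be sketched as follows. This is a minimal illustration, not our actual implementation: the `RoundRobinPool` class and the server names are hypothetical, and failure detection is assumed to happen elsewhere.

```python
class RoundRobinPool:
    """Hands out servers in rotation, skipping any marked as failed."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.failed = set()
        self._next = 0  # index of the next server in the rotation

    def mark_failed(self, server):
        self.failed.add(server)

    def pick(self):
        # Try each server at most once, starting from the rotation pointer.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server not in self.failed:
                return server
        raise RuntimeError("no healthy server available")


# Hypothetical cluster servers of one Resource Type cluster.
pool = RoundRobinPool(["cluster-a", "cluster-b", "cluster-c"])
first_three = [pool.pick() for _ in range(3)]
pool.mark_failed("cluster-b")
after_failure = [pool.pick() for _ in range(4)]
```

Once `cluster-b` is marked as failed, requests keep rotating over the two remaining servers, which is the behavior the redundant links are meant to provide.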

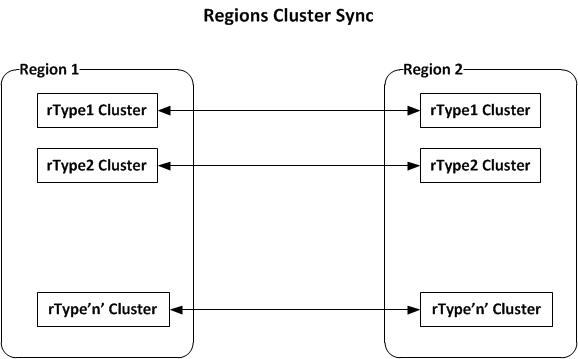

Figure 2 - Region Architecture

Figure 3 - Cluster Architecture

Each cluster is made up of a number of cluster servers (auto-scalable) and replication server rings. The cluster servers receive client requests from the regional servers and forward each request to any replication server in the appropriate ring, based on the ResourceID. The replication servers are arranged in multiple rings, and each ring can be accessed by any of the cluster servers. Each ring in a cluster represents a shard (sharding is done on the resource ID), and each replication server contains resource locations. The replication servers inside a ring are mirrors; that is, they contain the same information as all the other replication servers in the same ring (eventually consistent). Replication servers contain all the resource discovery data required by clients. Clients can read from or write to any of the replication servers. Whenever a client performs an update on any replication server, the update is synchronized within that ring and then sent to the cluster server, which replicates the change to the corresponding rings in the clusters of other regions. As the load on the cluster servers increases, the number of cluster servers can be increased to handle more requests. If one of the cluster servers fails, the other cluster servers handle the incoming client requests. This makes the architecture fault tolerant as well as scalable.

Figure 4 - Ring Synchronization

Figure 4 shows that when any replication server is updated, the other servers in its ring must be updated as well. The server that receives the update acts as the leader of the bidirectional ring and sends the update to its neighboring servers, which pass it on in turn. In this way all the servers in the ring remain synchronized.

Figure 5 - Cluster Synchronization between Regions

As mentioned above, each region contains a cluster for every Resource Type, and the other regions have an identical set of clusters. Clusters of the same Resource Type in different regions are synchronized, so data updated in one cluster is passed on to every other cluster of the same Resource Type. Since all clusters are synchronized, if a cluster in a particular region fails, or even if the whole region is down, client requests can be redirected to the next closest region. More details on cluster synchronization are given in the Cluster Sync section below.
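To make the "closest region, else next closest" rule concrete, here is a small sketch. It assumes the client's IP address has already been mapped to a latitude and longitude by some geolocation lookup, which is not shown. The region names and coordinates are made up for illustration, and great-circle distance stands in for whatever closeness metric a real deployment would use.

```python
import math

# Hypothetical region coordinates (latitude, longitude), for illustration only.
REGIONS = {
    "us-east": (39.0, -77.5),
    "eu-west": (53.3, -6.3),
    "ap-south": (19.1, 72.9),
}


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def pick_region(client_location, down=frozenset()):
    """Return the closest region that is not in the `down` set."""
    candidates = [name for name in REGIONS if name not in down]
    if not candidates:
        raise RuntimeError("all regions are down")
    return min(candidates,
               key=lambda name: haversine_km(client_location, REGIONS[name]))


client = (40.7, -74.0)  # assumed result of geolocating the client's IP
primary = pick_region(client)
fallback = pick_region(client, down={"us-east"})
```

Because every region holds a synchronized copy of the same clusters, the fallback region can answer the request without any special handling.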

Figure 6 - Region Interaction Topology

The clusters within each region sync with only a small subset of neighboring regions, creating a spanning tree of regions as shown in Figure 6. With this approach, each region only has to update a small subset of the other regions, and updates still reach every region along the tree, so data in all regions eventually becomes synchronized.

Assumptions

For this deliverable, we assume that the resources being requested are read-only. Since this paper only describes the resource discovery protocol, there is no need for end users to modify data at this point; resource modification techniques will be presented in a future deliverable. We also assume a client application in which the user browses to find the desired resource type and then selects the resource to discover. The client application then makes a request consisting of a number of fields (User IP, ResourceType, ResourceID), which are used for request routing.

Overview of Internal Algorithms

To make our design work as intended, a few different algorithms run at the different layers of our architecture or control the interaction between layers.

The first example is the Cluster Sync algorithm. This algorithm is responsible for maintaining consistent clusters across regions. If a resource ID is added to or removed from a particular cluster, the Cluster Sync algorithm takes care of synchronizing these updates across clusters.

Another example is the algorithm that creates the spanning tree of regions. Once the regional spanning tree is created, the clusters within the regions will be aware of which other regions they are responsible for updating to ensure data consistency across all regions.

A third example is the Ring Synchronization algorithm. Each ring represents a shard (horizontal partition) of the resource records, and the data servers in a ring are replicas of one another. When one data server receives an update, it propagates the update around the ring through its neighbors until every server in the ring carries the current data. The detailed steps are given in the Ring Synchronization section below.

Algorithm Analysis

There are four main algorithms which control the sharing and distribution of resources: the Regional Minimum Spanning Tree, Cluster Synchronization, Ring Synchronization, and finally the Resource Discovery algorithm which allows a user to search for and use a particular resource. The following is our analysis of how each of these algorithms functions, and why we consider each to be robust.

REGIONAL MINIMUM SPANNING TREE

The algorithm that connects the regions across the world builds a minimum spanning tree, which tells the clusters within each region which other regions they are responsible for updating to ensure data consistency across all regions. At the region level, an efficient way to create a minimum spanning tree is the well-known Asynchronous GHS (AsyncGHS) algorithm, the distributed algorithm of Gallager, Humblet, and Spira. The process of creating the regional spanning tree is as follows:

- A single region can contain multiple regional servers, so one server from each region is chosen to be a leader for that particular region. Leader election within a region is assumed to be trivial. Since there will only be a small number of region servers within a region, a leader could be chosen manually.

- The leader of each region begins an instance of the AsyncGHS algorithm. All regions are assumed to be connected in a complete graph topology, so each leader initially selects its least-cost link to another region, and these links determine the first core edges.

- Once the AsyncGHS algorithm has finished the merging and absorption process, the leader of each region knows which other regions it is directly connected to in the resulting minimum spanning tree.

- Each region leader propagates the resulting spanning tree information to the other regional servers within its region.

Connecting the regions with a spanning tree keeps the number of inter-region links to the minimum needed for connectivity (n - 1 links for n regions), and choosing the minimum spanning tree keeps the total cost of those links as low as possible. This limits the number of neighboring clusters that each region is responsible for updating when changes are made. In addition, we assume that larger distances mean greater link cost (in latency or money), so regions far from each other are less likely to become neighbors. Geographic separation between regions should therefore make it unlikely that any single region ends up with too many neighbors.
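To show what the resulting tree looks like, the sketch below computes a minimum spanning tree over a small complete graph of regions. Note that it uses Kruskal's algorithm, a centralized algorithm, purely as a stand-in: it is not AsyncGHS. When all link costs are distinct the minimum spanning tree is unique, so a distributed run should arrive at the same set of edges. The region names and costs are invented for the example.

```python
def minimum_spanning_tree(nodes, edges):
    """Kruskal's algorithm. `edges` is a list of (cost, u, v) tuples."""
    parent = {n: n for n in nodes}

    def find(n):
        # Follow parent pointers to the set representative (with path halving).
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    tree = []
    for cost, u, v in sorted(edges):
        root_u, root_v = find(u), find(v)
        if root_u != root_v:  # the edge joins two separate fragments
            parent[root_u] = root_v
            tree.append((u, v, cost))
    return tree


def neighbors(tree):
    """Map each region to the set of regions it must keep in sync."""
    adjacency = {}
    for u, v, _ in tree:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency


# Hypothetical regions in a complete graph with distinct link costs.
regions = ["A", "B", "C", "D"]
edges = [(1, "A", "B"), (4, "A", "C"), (6, "A", "D"),
         (2, "B", "C"), (5, "B", "D"), (3, "C", "D")]
tree = minimum_spanning_tree(regions, edges)
sync_neighbors = neighbors(tree)
```

In this example the six possible links are reduced to three, and no region has more than two neighbors to update.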

CLUSTER SYNC

This algorithm is responsible for maintaining consistent clusters across regions. If a resource ID is added to or removed from a particular cluster, the Cluster Sync algorithm takes care of synchronizing these updates across clusters. Once the MST connecting the regions is created, each region knows which other regions to synchronize with. Below are the steps for cluster synchronization:

- When an update is made in any region (say Region1), that region should synchronize its neighbors (say Region2 and Region3).

- To synchronize the neighbor regions, the cluster servers of Region1 (one or more, for a specific resourceType) connect to the cluster servers (one or more, of the same resourceType) of Region2 and Region3.

- The cluster servers of Region1 then send the updates to the neighboring cluster servers in Region2 and Region3.

- The receiving cluster servers apply the update to the appropriate shard of that resourceType, using the same consistent hashing strategy they use to find the shard for a resourceID.

- The same process is then carried out at Region2 and Region3 to synchronize their own neighbors. Because the regions form a tree, a region does not need to send the update back to the neighbor it came from.

Using this process, all of the regions are synchronized. One reason to synchronize all regions is to let the whole system keep functioning if an entire region goes down. In that situation, we can route the failed region's traffic to neighboring regions or to any other region we choose.
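The steps above can be sketched as a simple propagation over the region tree. This is only an illustration under a few assumptions: the tree is given as a hypothetical neighbor map, each region's cluster is reduced to a set of resource IDs, and a region forwards an update to every tree neighbor except the one it received the update from (our reading of how the propagation terminates).

```python
from collections import deque


def propagate(tree_neighbors, origin, apply_update):
    """Spread an update over the region tree, never sending back to the sender."""
    queue = deque([(origin, None)])  # (region, region it got the update from)
    order = []
    while queue:
        region, sender = queue.popleft()
        apply_update(region)
        order.append(region)
        for neighbor in sorted(tree_neighbors[region]):
            if neighbor != sender:
                queue.append((neighbor, region))
    return order


# Hypothetical spanning tree: Region1 is linked to Region2 and Region3,
# and Region2 is also linked to Region4.
tree_neighbors = {
    "Region1": {"Region2", "Region3"},
    "Region2": {"Region1", "Region4"},
    "Region3": {"Region1"},
    "Region4": {"Region2"},
}
stores = {name: set() for name in tree_neighbors}
order = propagate(tree_neighbors, "Region1",
                  lambda region: stores[region].add("resource-42"))
```

Since a tree has no cycles, every region receives the update exactly once, even though no region knows the whole topology.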

RING SYNCHRONIZATION

Each ring represents a shard (horizontal partition) of the resource records, and the data servers are the repositories of those records. The data in a ring is replicated among all the data servers that are part of the ring. The process is as follows:

- One data server receives an update (add/edit/delete of a resource location). Call this data server the active data server.

- The active data server propagates this update to its two neighbors in the ring. The update includes the identification of the active server and a timestamp.

- A neighbor receiving an update applies it locally, provided it has not already received an update with the same timestamp and identification from its other neighbor.

- The neighbor then propagates the same update to its next neighbor.

In this way the data servers in the ring are synchronized to carry the current data. There will of course be a synchronization delay, but the data servers in the ring will be "eventually consistent".
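The following is a minimal simulation of the ring steps above. The `DataServer` class, the `ring_sync` helper, and the in-memory queue standing in for the network are all assumptions made for the sketch; only the rule of discarding an update whose (origin, timestamp) pair has already been seen comes from the description.

```python
from collections import deque


class DataServer:
    def __init__(self, name):
        self.name = name
        self.records = {}
        self.seen = set()  # (origin name, timestamp) of updates already applied

    def apply(self, update):
        """Apply the update unless it was already seen; return True if new."""
        update_id = (update["origin"], update["timestamp"])
        if update_id in self.seen:
            return False
        self.seen.add(update_id)
        self.records[update["key"]] = update["value"]
        return True


def ring_sync(servers, origin_index, key, value, timestamp):
    """Propagate one update both ways around the ring; return messages sent."""
    n = len(servers)
    update = {"origin": servers[origin_index].name, "timestamp": timestamp,
              "key": key, "value": value}
    servers[origin_index].apply(update)
    # Each queue entry is (receiver index, direction the update is travelling).
    queue = deque([((origin_index - 1) % n, -1), ((origin_index + 1) % n, +1)])
    messages = 0
    while queue:
        index, step = queue.popleft()
        messages += 1
        if servers[index].apply(update):
            queue.append(((index + step) % n, step))  # keep going the same way
    return messages


ring = [DataServer(f"ds{i}") for i in range(5)]
sent = ring_sync(ring, 0, "resource-42", "10.0.0.7", timestamp=1)
```

The two copies of the update travel in opposite directions and stop where they meet, so the update does not circulate forever.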

We will use record-based synchronization to synchronize ring servers. In the record-based technique, the actual record is transferred from one server in the ring to its neighbors. This increases network traffic compared with sending only the operation, but it remains correct when updates involve non-deterministic functions, because every server stores the same resulting record.

RESOURCE DISCOVERY

Resource Discovery finds the appropriate resource for a client based on the client's request. The algorithm operates as follows:

Whenever a client wants to use a particular resource, it formulates a request for that resource type. The request contains the Source IP Address, Resource Type, and Resource ID. The Source IP Address is used by the DNS server to determine the closest region for that client. The Resource Type selects a particular resource type cluster from all the clusters in that region (every region has one cluster per resource type). The Resource ID selects a ring of replication servers from all the rings connected to the cluster server; this selection is done using consistent hashing on the Resource ID. The complete process, from initiating a request to the allocation of a resource for a client, is as follows:

- The client that needs a resource sends a request containing its source IP address, the required Resource Type, and the Resource ID.

- The DNS server receives this request first and, based on the client's IP address, selects the region closest to the client. It can also redirect the client to the next closest region if the closest region is not functional at that time.

- A region contains many regional servers. One of the regional servers is selected in round-robin fashion, which helps with load balancing.

- Based on the Resource Type requested by the client, the regional server selects a Resource Type cluster. Each region has exactly one cluster for every Resource Type a client may want to use, so cluster selection is a simple mapping from the requested Resource Type to a cluster in the region.

- Each Resource Type cluster has several cluster servers. One of them is selected using a round-robin scheme, which again helps balance the load of incoming client requests.

- The cluster servers are connected to the rings of replication servers. The number of rings depends on how frequently clients request that resource type. Consistent hashing on the Resource ID is used to select a ring. Consistent hashing has advantages over standard hashing schemes: its properties, such as spread, load, smoothness, monotonicity, and balance, mean that adding or removing a ring remaps only a small fraction of the Resource IDs.

- Clients can read from or write to any of these replication servers, since each server is a mirror of the others: all the servers contain the same data, and a change on one replication server is propagated to the rest.

Because the Resource Discovery algorithm is partitioned into regions based on the user's geographic location and uses round-robin load balancing across servers, it should perform at an acceptable speed. Combined with consistent hashing for locating a particular resource, this helps make our resource discovery protocol both fast and scalable.
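As a rough illustration of ring selection by consistent hashing, the sketch below maps Resource IDs to replication rings and then adds a fourth ring. The `ConsistentHash` class, the use of SHA-256, and the virtual nodes (`vnodes`, a refinement described in the Dynamo paper) are assumptions of this example rather than details of our design.

```python
import bisect
import hashlib


class ConsistentHash:
    """Maps Resource IDs to replication rings using a hash circle."""

    def __init__(self, ring_names, vnodes=64):
        # Place several points per replication ring on the circle.
        self._points = sorted(
            (self._hash(f"{name}#{i}"), name)
            for name in ring_names
            for i in range(vnodes)
        )
        self._keys = [point for point, _ in self._points]

    @staticmethod
    def _hash(value):
        digest = hashlib.sha256(value.encode()).digest()
        return int.from_bytes(digest[:8], "big")

    def lookup(self, resource_id):
        # First point clockwise from the ID's hash, wrapping around at the end.
        i = bisect.bisect_right(self._keys, self._hash(resource_id))
        return self._points[i % len(self._points)][1]


ids = [f"res-{i}" for i in range(1000)]
three = ConsistentHash(["ring-0", "ring-1", "ring-2"])
four = ConsistentHash(["ring-0", "ring-1", "ring-2", "ring-3"])
moved = [r for r in ids if three.lookup(r) != four.lookup(r)]
```

Every ID in `moved` now belongs to the new `ring-3`; no ID is shuffled between the existing rings. With plain `hash(id) % number_of_rings`, adding a ring would typically remap most IDs instead.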

Example Resource Discovery

The resource discovery protocol which finds and returns resources is currently the focus of our attention. In order to retrieve a particular resource, the algorithm will operate as follows:

- A user will formulate a request for a particular resource type. This request will consist of multiple parts, including the source IP address (IP), resource type (RTYPE), resource id (RID), and the action being performed (DISCOVER, UPDATE, etc.).

- The user will attempt a connection, which through the local DNS servers will route the user to the nearest region servers. Since there can be multiple servers operating at the region level, the individual region server picked within the chosen region will be based on a round-robin scheme to load balance all incoming requests into the region.

- The region server will receive the request and analyze the RTYPE and action. The region server will know that this is a DISCOVER message (meaning the requesting node is attempting to locate a specific resource), so based on the value of RTYPE, the region server will forward the request to the appropriate resource cluster which services the requested resource type. In a similar manner to the region server operation above, requests will be routed to an individual cluster server within the chosen resource cluster in a round-robin fashion to account for load balancing within the cluster.

- The chosen cluster server will receive the request and analyze the RID. Based on this resource id, the cluster server will know which resource ring to forward the incoming request on to.

- The chosen server in the resource ring will receive the request and reply directly to the requesting user with the requested resource.
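The walk-through above can be condensed into a toy, single-process sketch. All class names, the sample resource, and the addresses are hypothetical. DNS-based region selection is left out, each ring is reduced to a dictionary, and plain modulo hashing stands in for consistent hashing to keep the example short.

```python
import zlib
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Request:
    ip: str
    rtype: str
    rid: str
    action: str = "DISCOVER"


class Cluster:
    def __init__(self, shard_count):
        # Each dict stands in for one replication ring: RID -> location.
        self.shards = [dict() for _ in range(shard_count)]

    def shard_for(self, rid):
        # Modulo hashing for brevity; the design calls for consistent hashing.
        return self.shards[zlib.crc32(rid.encode()) % len(self.shards)]


class RegionServer:
    def __init__(self, clusters):
        self.clusters = clusters  # RTYPE -> Cluster

    def handle(self, request):
        if request.action != "DISCOVER":
            raise NotImplementedError(request.action)
        cluster = self.clusters.get(request.rtype)
        if cluster is None:
            return None
        return cluster.shard_for(request.rid).get(request.rid)


class Region:
    def __init__(self, servers):
        self.servers = servers
        self._turn = count()

    def route(self, request):
        # Round-robin over the region servers.
        server = self.servers[next(self._turn) % len(self.servers)]
        return server.handle(request)


storage = Cluster(shard_count=4)
storage.shard_for("vol-17")["vol-17"] = "10.2.0.9:7000"
clusters = {"storage": storage}
region = Region([RegionServer(clusters), RegionServer(clusters)])
found = region.route(Request("203.0.113.5", "storage", "vol-17"))
missing = region.route(Request("203.0.113.5", "storage", "vol-99"))
```

Both region servers share the same clusters, so the answer does not depend on which one the round-robin picks.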

That's all for this post. In the next post I will give a brief overview of how we planned to simulate this architecture. We decided to use Amazon EC2 to build our architecture and topologies, and we also worked out a way to test resource discovery requests.

Note: There may be several issues with the approach and the architecture we presented. There might be several additions or improvements to this architecture. Comments will be appreciated.

References

- Amazon Web Services – Elastic Compute Cloud (EC2) [http://aws.amazon.com/ec2]

- DeCandia, G., Hastorun, D., Jampani, M., Kakulapati, G., Lakshman, A., Pilchin, A., Sivasubramanian, S., Vosshall, P., and Vogels, W. 2007. Dynamo: Amazon’s Highly Available Key-value Store. (Stevenson, Washington, United States, October 14-17, 2007). SOSP’07. ACM Press, New York, NY, 205-220.
